Installing and Deploying a Kubernetes (K8s) Cluster on CentOS 7

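A Kubernetes cluster can be installed in several ways: compiling from a source package, installing prebuilt binary packages, or using the kubeadm tool. This article installs the cluster from binary files.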

System environment


| Hostname | IP address | Operating system | Installed components |
|---|---|---|---|
| k8s-master | 192.168.2.212 | CentOS 7.5 64-bit | etcd, kube-apiserver, kube-controller-manager, kube-scheduler |
| k8s-node1 | 192.168.2.213 | CentOS 7.5 64-bit | kubelet, kube-proxy |
| k8s-node2 | 192.168.2.214 | CentOS 7.5 64-bit | kubelet, kube-proxy |
| k8s-node3 | 192.168.2.215 | CentOS 7.5 64-bit | kubelet, kube-proxy |

I. Global steps (run on all machines)

1. Install the required tools

yum -y install vim bash-completion wget

Note: With bash-completion installed, the Tab key can complete long-form options, which is very convenient. kubectl options are all long-form, and I can barely remember some commands, let alone their long-form options.

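2. Disable the firewalld firewall

The Kubernetes master (management host) and the nodes (worker nodes) exchange a large amount of network traffic. The secure approach is to open, on the firewall, only the ports that the components need to reach each other; I will cover firewall configuration separately in a later post. In a trusted internal network the firewall service can simply be turned off, and that is what we do here to deploy a test environment.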

systemctl disable firewalld

systemctl stop firewalld

3. Disable SELinux

The purpose of disabling SELinux is to let containers read the host file system.
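The two `sed` substitutions used in this step can be previewed on a scratch file before touching `/etc/selinux/config`. The following is a minimal sketch: the function name and the sample file contents are assumptions made for illustration, and it relies on GNU `sed -i` as shipped with CentOS.

```shell
#!/usr/bin/env bash
# Sketch: apply the SELinux config edits to a scratch file (sample content is assumed).
preview_selinux_edits() {
  local sample
  sample="$(mktemp)"
  # Simplified stand-in for /etc/selinux/config.
  printf 'SELINUX=enforcing\nSELINUXTYPE=targeted\n' > "$sample"
  # Same substitutions as in this step, applied to the scratch file only.
  sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" "$sample"
  sed -i 's/SELINUXTYPE=targeted/#&/' "$sample"
  cat "$sample"
  rm -f "$sample"
}

preview_selinux_edits
```

The first substitution switches the mode to disabled; the second comments out the `SELINUXTYPE` line, since `&` in the replacement stands for the matched text.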

sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

sed -i 's/SELINUXTYPE=targeted/#&/' /etc/selinux/config

setenforce 0

II. Deploy the master (management) node

1. Install CFSSL

[root@k8s-master ~]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64 -O /usr/local/bin/cfssl

[root@k8s-master ~]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64 -O /usr/local/bin/cfssljson

[root@k8s-master ~]# wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64 -O /usr/local/bin/cfssl-certinfo

[root@k8s-master ~]# chmod +x /usr/local/bin/cfssl /usr/local/bin/cfssljson /usr/local/bin/cfssl-certinfo

2. Download and extract the prebuilt binary packages

[root@k8s-master tmp]# wget https://github.com/etcd-io/etcd/releases/download/v3.3.10/etcd-v3.3.10-linux-amd64.tar.gz

[root@k8s-master tmp]# wget https://dl.k8s.io/v1.12.2/kubernetes-server-linux-amd64.tar.gz

[root@k8s-master tmp]# tar zxvf etcd-v3.3.10-linux-amd64.tar.gz

[root@k8s-master tmp]# tar zxvf kubernetes-server-linux-amd64.tar.gz

3. Copy the executables to /usr/bin

[root@k8s-master tmp]# cd etcd-v3.3.10-linux-amd64/

[root@k8s-master etcd-v3.3.10-linux-amd64]# cp -p etcd etcdctl /usr/bin/

[root@k8s-master etcd-v3.3.10-linux-amd64]# cd /tmp/kubernetes/server/bin/

[root@k8s-master bin]# cp -p kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/bin/

4. Configure the etcd service

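Note: etcd is the database of the Kubernetes cluster and stores the data of all resource objects, so for security it uses digital certificate authentication. In production, it is recommended to separate etcd out and deploy it as its own etcd cluster.

(1) Generate the CA certificate configuration files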

[root@k8s-master bin]# mkdir -p /etc/{etcd/ssl,kubernetes/ssl}

[root@k8s-master bin]# cd /etc/etcd/ssl/

[root@k8s-master ssl]# cfssl print-defaults config > ca-config.json

[root@k8s-master ssl]# cfssl print-defaults csr > ca-csr.json

(2) Edit the configuration files

Edit ca-config.json and set the validity period to 43800h (5 years)

{

"signing": {

"default": {

"expiry": "43800h"

},

"profiles": {

"kubernetes": {

"expiry": "43800h",

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

]

}

}

}

}

"server auth" and "client auth" mean that the same certificate can be used for both server-side and client-side verification.
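As a quick sanity check on the `43800h` expiry used in ca-config.json, the conversion to years is plain integer arithmetic. The helper name below is hypothetical, and it assumes 24-hour days and 365-day years.

```shell
#!/usr/bin/env bash
# Convert a cfssl-style duration such as "43800h" to whole years (assumes 365-day years).
hours_to_years() {
  local hours="${1%h}"   # strip a trailing "h": 43800h -> 43800
  echo $(( hours / 24 / 365 ))
}

hours_to_years 43800h
```

The same helper can be used to pick a different validity period, for example `8760h` for one year.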

Edit ca-csr.json so that it reads as follows

{

"CN": "k8s-master",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "ShangHai",

"ST": "ShangHai",

"O": "k8s",

"OU": "System"

}

]

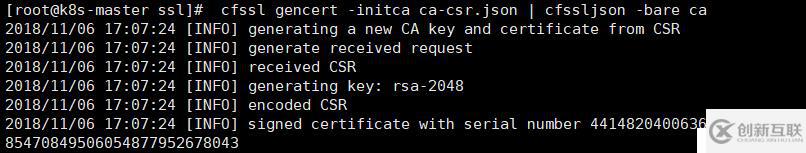

}(3)生成CA證書和私鑰相關(guān)文件

[root@k8s-master ssl]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

(4) Issue the etcd certificate

[root@k8s-master ssl]# cfssl print-defaults csr > etcd-csr.json

Edit etcd-csr.json so that it reads as follows

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"192.168.2.212"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "ShangHai",

"ST": "ShangHai",

"O": "k8s",

"OU": "System"

}

]

}

Generate the etcd certificate and private key

[root@k8s-master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes -hostname=127.0.0.1,192.168.2.212 etcd-csr.json | cfssljson -bare etcd

Note: "hosts" lists the IPs of all etcd hosts. -hostname takes the IP of the current host; it can also list the IPs of all etcd hosts, in which case the other etcd nodes do not need their own certificate and private key and can use copies of these files. The value of -profile=kubernetes must match a profile name under the profiles field in ca-config.json.
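Since the `-hostname` value is just a comma-separated list, it can be assembled from a list of etcd member IPs. The sketch below assumes a hypothetical `ETCD_IPS` array and hypothetical helper names; it only prints the resulting command and does not run cfssl.

```shell
#!/usr/bin/env bash
# Hypothetical list of etcd member IPs; this article runs a single etcd on the master.
ETCD_IPS=(127.0.0.1 192.168.2.212)

# Join all arguments with commas.
join_by_comma() {
  local IFS=,
  echo "$*"
}

# Print (not run) the gencert command for the given IPs.
build_gencert_cmd() {
  local hosts
  hosts="$(join_by_comma "$@")"
  echo "cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes -hostname=${hosts} etcd-csr.json"
}

build_gencert_cmd "${ETCD_IPS[@]}"
```

For a multi-member etcd cluster, adding the extra IPs to the array yields one certificate that every member can share, as the note describes.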

(5) Create a script that generates the etcd configuration file

[root@k8s-master ssl]# cd /root/

[root@k8s-master ~]# vim etcd.sh

#!/bin/bash

etcd_data_dir=/data/etcd

mkdir -p ${etcd_data_dir}

ETCD_NAME=${1:-"etcd"}

ETCD_LISTEN_IP=${2:-"192.168.2.212"}

ETCD_INITIAL_CLUSTER=${3:-}

cat <<EOF >//etc/etcd/etcd.conf

# [member]

ETCD_NAME="${ETCD_NAME}"

ETCD_DATA_DIR="${etcd_data_dir}/default.etcd"

#ETCD_SNAPSHOT_COUNTER="10000"

#ETCD_HEARTBEAT_INTERVAL="100"

#ETCD_ELECTION_TIMEOUT="1000"

ETCD_LISTEN_PEER_URLS="https://${ETCD_LISTEN_IP}:2380"

ETCD_LISTEN_CLIENT_URLS="https://${ETCD_LISTEN_IP}:2379,https://127.0.0.1:2379"

#ETCD_MAX_SNAPSHOTS="5"

#ETCD_MAX_WALS="5"

#ETCD_CORS=""

#

#[cluster]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://${ETCD_LISTEN_IP}:2380"

ETCD_INITIAL_CLUSTER="${ETCD_INITIAL_CLUSTER}"

ETCD_INITIAL_CLUSTER_STATE="new"

ETCD_INITIAL_CLUSTER_TOKEN="k8s-etcd-cluster"

ETCD_ADVERTISE_CLIENT_URLS="https://${ETCD_LISTEN_IP}:2379"

#

#[proxy]

#ETCD_PROXY="off"

#

#[security]

ETCD_CLIENT_CERT_AUTH="true"

ETCD_CA_FILE="/etc/etcd/ssl/ca.pem"

ETCD_CERT_FILE="/etc/etcd/ssl/${ETCD_NAME}.pem"

ETCD_KEY_FILE="/etc/etcd/ssl/${ETCD_NAME}-key.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

ETCD_PEER_CA_FILE="/etc/etcd/ssl/ca.pem"

ETCD_PEER_CERT_FILE="/etc/etcd/ssl/${ETCD_NAME}.pem"

ETCD_PEER_KEY_FILE="/etc/etcd/ssl/${ETCD_NAME}-key.pem"

EOF

cat <<EOF >//usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

[Service]

Type=simple

WorkingDirectory=${etcd_data_dir}

EnvironmentFile=-/etc/etcd/etcd.conf

ExecStart=/bin/bash -c "GOMAXPROCS=\$(nproc) /usr/bin/etcd"

Type=notify

[Install]

WantedBy=multi-user.target

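EOF

(6) Run the script to generate the configuration file and the systemd unit file

[root@k8s-master ~]# sh etcd.sh

5. Configure the kube-apiserver service

(1) Create a script that generates the apiserver configuration file

The apiserver.sh script reads as follows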

#!/usr/bin/env bash

MASTER_ADDRESS=${1:-"192.168.2.212"}

ETCD_SERVERS=${2:-"https://127.0.0.1:2379"}

SERVICE_CLUSTER_IP_RANGE=${3:-"10.10.10.0/24"}

ADMISSION_CONTROL=${4:-""}

API_LOGDIR=${5:-"/data/apiserver/log"}

mkdir -p ${API_LOGDIR}

cat <<EOF >/etc/kubernetes/kube-apiserver

# --logtostderr=true: log to standard error instead of files

KUBE_LOGTOSTDERR="--logtostderr=false"

APISERVER_LOGDIR="--log-dir=${API_LOGDIR}"

# --v=0: log level for V logs

KUBE_LOG_LEVEL="--v=2"

# --etcd-servers=[]: List of etcd servers to watch (http://ip:port),

# comma separated. Mutually exclusive with -etcd-config

KUBE_ETCD_SERVERS="--etcd-servers=${ETCD_SERVERS}"

# --etcd-cafile="": SSL Certificate Authority file used to secure etcd communication.

KUBE_ETCD_CAFILE="--etcd-cafile=/etc/etcd/ssl/ca.pem"

# --etcd-certfile="": SSL certification file used to secure etcd communication.

KUBE_ETCD_CERTFILE="--etcd-certfile=/etc/etcd/ssl/etcd.pem"

# --etcd-keyfile="": key file used to secure etcd communication.

KUBE_ETCD_KEYFILE="--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem"

# --insecure-bind-address=127.0.0.1: The IP address on which to serve the --insecure-port.

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

# --insecure-port=8080: The port on which to serve unsecured, unauthenticated access.

KUBE_API_PORT="--insecure-port=8080"

# --kubelet-port=10250: Kubelet port

NODE_PORT="--kubelet-port=10250"

# --advertise-address=<nil>: The IP address on which to advertise

# the apiserver to members of the cluster.

KUBE_ADVERTISE_ADDR="--advertise-address=${MASTER_ADDRESS}"

# --allow-privileged=false: If true, allow privileged containers.

KUBE_ALLOW_PRIV="--allow-privileged=false"

# --service-cluster-ip-range=<nil>: A CIDR notation IP range from which to assign service cluster IPs.

# This must not overlap with any IP ranges assigned to nodes for pods.

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=${SERVICE_CLUSTER_IP_RANGE}"

# --admission-control="AlwaysAdmit": Ordered list of plug-ins

# to do admission control of resources into cluster.

KUBE_ADMISSION_CONTROL="--admission-control=${ADMISSION_CONTROL}"

EOF

KUBE_APISERVER_OPTS=" \${KUBE_LOGTOSTDERR} \\

\${APISERVER_LOGDIR} \\

\${KUBE_LOG_LEVEL} \\

\${KUBE_ETCD_SERVERS} \\

\${KUBE_ETCD_CAFILE} \\

\${KUBE_ETCD_CERTFILE} \\

\${KUBE_ETCD_KEYFILE} \\

\${KUBE_API_ADDRESS} \\

\${KUBE_API_PORT} \\

\${NODE_PORT} \\

\${KUBE_ADVERTISE_ADDR} \\

\${KUBE_ALLOW_PRIV} \\

\${KUBE_SERVICE_ADDRESSES} \\

\${KUBE_ADMISSION_CONTROL} \\

\${KUBE_API_CLIENT_CA_FILE} \\

\${KUBE_API_TLS_CERT_FILE} \\

\${KUBE_API_TLS_PRIVATE_KEY_FILE}"

cat <<EOF >/usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-apiserver

ExecStart=/usr/bin/kube-apiserver ${KUBE_APISERVER_OPTS}

Restart=on-failure

[Install]

WantedBy=multi-user.target

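EOF

Startup parameters:

--logtostderr: set to false so that logs are written to files instead of stderr.

--log-dir: log file directory.

--v: log verbosity level.

--etcd-servers: URLs of the etcd service.

--etcd-cafile: path to the CA root certificate used to connect to etcd.

--etcd-certfile: path to the client certificate used to connect to etcd.

--etcd-keyfile: path to the private key used to connect to etcd.

--insecure-bind-address: the apiserver's insecure IP address. This option is deprecated and is replaced later in this article.

--insecure-port: the apiserver's insecure port. This option is deprecated and is replaced later in this article.

--kubelet-port: the kubelet port. This option is deprecated and is dropped later in this article.

--advertise-address: IP address of the apiserver host, advertised to the other cluster members.

--allow-privileged: whether containers may run in privileged mode; defaults to false.

--service-cluster-ip-range: virtual IP address range for Services in the cluster.

--admission-control: admission control settings for the cluster; the control modules take effect in order as plug-ins. This option is deprecated and is replaced later in this article.

(2) Run the script to generate the configuration file and the systemd unit file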

[root@k8s-master ~]# sh apiserver.sh

6. Configure the kube-controller-manager service

(1) Create a script that generates the controller-manager configuration file

The controller-manager.sh script reads as follows

#!/usr/bin/env bash

MASTER_ADDRESS=${1:-"192.168.2.212"}

CON_LOGDIR=${2:-"/data/controller-manager/log"}

mkdir -p ${CON_LOGDIR}

cat <<EOF >/etc/kubernetes/kube-controller-manager

KUBE_LOGTOSTDERR="--logtostderr=false"

CONTROLLER_LOGDIR="--log-dir=${CON_LOGDIR}"

KUBE_LOG_LEVEL="--v=2"

KUBE_MASTER="--master=${MASTER_ADDRESS}:8080"

# --root-ca-file="": If set, this root certificate authority will be included in

# service account's token secret. This must be a valid PEM-encoded CA bundle.

KUBE_CONTROLLER_MANAGER_ROOT_CA_FILE="--root-ca-file=/etc/kubernetes/ca.pem"

# --service-account-private-key-file="": Filename containing a PEM-encoded private

# RSA key used to sign service account tokens.

KUBE_CONTROLLER_MANAGER_SERVICE_ACCOUNT_PRIVATE_KEY_FILE="--service-account-private-key-file=/etc/kubernetes/k8s-server-key.pem"

# --leader-elect: Start a leader election client and gain leadership before

# executing the main loop. Enable this when running replicated components for high availability.

KUBE_LEADER_ELECT="--leader-elect"

EOF

KUBE_CONTROLLER_MANAGER_OPTS=" \${KUBE_LOGTOSTDERR} \\

\${CONTROLLER_LOGDIR} \\

\${KUBE_LOG_LEVEL} \\

\${KUBE_MASTER} \\

\${KUBE_CONTROLLER_MANAGER_ROOT_CA_FILE} \\

\${KUBE_CONTROLLER_MANAGER_SERVICE_ACCOUNT_PRIVATE_KEY_FILE}\\

\${KUBE_LEADER_ELECT}"

cat <<EOF >/usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-controller-manager

ExecStart=/usr/bin/kube-controller-manager ${KUBE_CONTROLLER_MANAGER_OPTS}

Restart=on-failure

[Install]

WantedBy=multi-user.target

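EOF

(2) Run the script to generate the configuration file and the systemd unit file

[root@k8s-master ~]# sh controller-manager.sh

7. Configure the kube-scheduler service

(1) Create a script that generates the scheduler configuration file

The scheduler.sh script reads as follows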

#!/usr/bin/env bash

MASTER_ADDRESS=${1:-"192.168.2.212"}

SCH_LOGDIR=${2:-"/data/scheduler/log"}

mkdir -p ${SCH_LOGDIR}

cat <<EOF >/etc/kubernetes/kube-scheduler

###

# kubernetes scheduler config

# --logtostderr=true: log to standard error instead of files

KUBE_LOGTOSTDERR="--logtostderr=false"

SCHEDULER_LOGDIR="--log-dir=${SCH_LOGDIR}"

# --v=0: log level for V logs

KUBE_LOG_LEVEL="--v=4"

# --master: The address of the Kubernetes API server (overrides any value in kubeconfig).

KUBE_MASTER="--master=${MASTER_ADDRESS}:8080"

# --leader-elect: Start a leader election client and gain leadership before

# executing the main loop. Enable this when running replicated components for high availability.

KUBE_LEADER_ELECT="--leader-elect"

# Add your own!

KUBE_SCHEDULER_ARGS=""

EOF

KUBE_SCHEDULER_OPTS=" \${KUBE_LOGTOSTDERR} \\

\${SCHEDULER_LOGDIR} \\

\${KUBE_LOG_LEVEL} \\

\${KUBE_MASTER} \\

\${KUBE_LEADER_ELECT} \\

\$KUBE_SCHEDULER_ARGS"

cat <<EOF >/usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-scheduler

ExecStart=/usr/bin/kube-scheduler ${KUBE_SCHEDULER_OPTS}

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

(2) Run the script to generate the configuration file and the systemd unit file

[root@k8s-master ~]# sh scheduler.sh

8. Start all services on the master host

[root@k8s-master ~]# systemctl daemon-reload

[root@k8s-master ~]# systemctl enable etcd

[root@k8s-master ~]# systemctl start etcd

[root@k8s-master ~]# systemctl enable kube-apiserver

[root@k8s-master ~]# systemctl start kube-apiserver

[root@k8s-master ~]# systemctl enable kube-controller-manager

[root@k8s-master ~]# systemctl start kube-controller-manager

[root@k8s-master ~]# systemctl enable kube-scheduler

[root@k8s-master ~]# systemctl start kube-scheduler

9. Verify that etcd is running

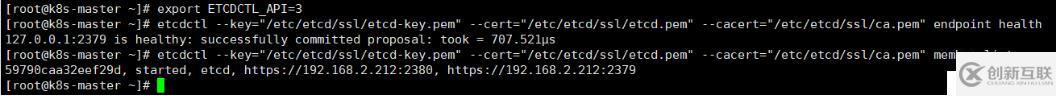

[root@k8s-master ~]# export ETCDCTL_API=3

[root@k8s-master ~]# etcdctl --key="/etc/etcd/ssl/etcd-key.pem" --cert="/etc/etcd/ssl/etcd.pem" --cacert="/etc/etcd/ssl/ca.pem" endpoint health

[root@k8s-master ~]# etcdctl --key="/etc/etcd/ssl/etcd-key.pem" --cert="/etc/etcd/ssl/etcd.pem" --cacert="/etc/etcd/ssl/ca.pem" member list

ETCDCTL_API=3 selects the etcd 3.x (v3 API) commands. Because etcd is configured with certificates, every etcdctl command must pass the certificates as well.
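Because every etcdctl call needs the same three TLS flags, they can be kept in one place. The sketch below assumes the certificate paths used in this article; `etcdctl_tls_args` is a hypothetical helper, and the last line only prints the command instead of contacting etcd.

```shell
#!/usr/bin/env bash
# Directory holding the etcd certificates generated earlier in this article.
ETCD_SSL_DIR="/etc/etcd/ssl"

# Emit the TLS flags shared by all etcdctl (v3 API) invocations.
etcdctl_tls_args() {
  echo "--cacert=${ETCD_SSL_DIR}/ca.pem --cert=${ETCD_SSL_DIR}/etcd.pem --key=${ETCD_SSL_DIR}/etcd-key.pem"
}

# Print the full command for review; drop the leading "echo" to actually run it.
echo ETCDCTL_API=3 etcdctl $(etcdctl_tls_args) endpoint health
```

Keeping the flags in a function avoids retyping the paths for `endpoint health`, `member list`, and any later etcdctl commands.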

III. Deploy the worker nodes

1. Set up Docker

(1) Install Docker

Note: this installs Docker Community Edition, version 18.06.1-ce

[root@k8s-node1 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@k8s-node1 ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@k8s-node1 ~]# yum makecache fast

[root@k8s-node1 ~]# yum -y install docker-ce

(2) Edit the configuration file to add the private registry address and a registry mirror address, and to set the Docker data directory

[root@k8s-node1 ~]# mkdir -p /data/docker

[root@k8s-node1 ~]# mkdir -p /etc/docker

[root@k8s-node1 ~]# vim /etc/docker/daemon.json

{

"registry-mirrors": ["https://registry.docker-cn.com"], "graph": "/data/docker",

"insecure-registries": ["192.168.2.225:5000"]

}

(3) Start Docker and enable it at boot

[root@k8s-node1 ~]# systemctl start docker

[root@k8s-node1 ~]# systemctl enable docker

2. Download and extract the prebuilt binary package

[root@k8s-node1 tmp]# wget https://dl.k8s.io/v1.12.2/kubernetes-node-linux-amd64.tar.gz

[root@k8s-node1 tmp]# tar zxvf kubernetes-node-linux-amd64.tar.gz

3. Copy the executables to /usr/bin

[root@k8s-node1 tmp]# cd kubernetes/node/bin/

[root@k8s-node1 bin]# cp -p kubectl kubelet kube-proxy /usr/bin/

4. Configure the kubelet service

(1) Create a script that generates the kubelet configuration file

The kubelet.sh script reads as follows

#!/usr/bin/env bash

MASTER_ADDRESS=${1:-"192.168.2.212"}

NODE_ADDRESS=${2:-"192.168.2.213"}

KUBECONFIG_DIR=${KUBECONFIG_DIR:-/etc/kubernetes}

NODE_LOGDIR=${3:-"/data/kubelet/log"}

mkdir -p ${KUBECONFIG_DIR}

mkdir -p ${NODE_LOGDIR}

# Generate a kubeconfig file

cat <<EOF > "${KUBECONFIG_DIR}/kubelet.kubeconfig"

apiVersion: v1
kind: Config
clusters:
- cluster:
    server: http://${MASTER_ADDRESS}:8080/
  name: local
contexts:
- context:
    cluster: local
  name: local
current-context: local

EOF

cat <<EOF >/etc/kubernetes/kubelet

# --logtostderr=true: log to standard error instead of files

KUBE_LOGTOSTDERR="--logtostderr=false"

KUBELET_LOGDIR="--log-dir=${NODE_LOGDIR}"

# --v=0: log level for V logs

KUBE_LOG_LEVEL="--v=2"

# --hostname-override="": If non-empty, will use this string as identification instead of the actual hostname.

NODE_HOSTNAME="--hostname-override=${NODE_ADDRESS}"

# Path to a kubeconfig file, specifying how to connect to the API server.

KUBELET_KUBECONFIG="--kubeconfig=${KUBECONFIG_DIR}/kubelet.kubeconfig"

# Add your own!

KUBELET_ARGS="--kubeconfig=${KUBECONFIG_DIR}/kubelet.kubeconfig --hostname-override=${NODE_ADDRESS} --logtostderr=false --log-dir=${NODE_LOGDIR} --v=2"

EOF

KUBELET_OPTS=" \${KUBE_LOGTOSTDERR} \\

\${KUBELET_LOGDIR} \\

\${KUBE_LOG_LEVEL} \\

\${NODE_HOSTNAME} \\

\${KUBELET_KUBECONFIG}"

cat <<EOF >/usr/lib/systemd/system/kubelet.service

[Unit]

Description=Kubernetes Kubelet

After=docker.service

Requires=docker.service

[Service]

EnvironmentFile=-/etc/kubernetes/kubelet

ExecStart=/usr/bin/kubelet ${KUBELET_OPTS}

Restart=on-failure

KillMode=process

RestartSec=15s

[Install]

WantedBy=multi-user.target

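EOF

(2) Run the script to generate the configuration file and the systemd unit file

[root@k8s-node1 ~]# sh kubelet.sh

5. Configure the kube-proxy service

(1) Create a script that generates the kube-proxy configuration file

The proxy.sh script reads as follows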

#!/usr/bin/env bash

MASTER_ADDRESS=${1:-"192.168.2.212"}

NODE_ADDRESS=${2:-"192.168.2.213"}

mkdir -p /data/proxy/log

cat <<EOF >/etc/kubernetes/kube-proxy

# --logtostderr=true: log to standard error instead of files

KUBE_LOGTOSTDERR="--logtostderr=false"

PROXY_LOGDIR="--log-dir=/data/proxy/log"

# --v=0: log level for V logs

KUBE_LOG_LEVEL="--v=2"

# --hostname-override="": If non-empty, will use this string as identification instead of the actual hostname.

NODE_HOSTNAME="--hostname-override=${NODE_ADDRESS}"

# --master="": The address of the Kubernetes API server (overrides any value in kubeconfig)

KUBE_MASTER="--master=http://${MASTER_ADDRESS}:8080"

EOF

KUBE_PROXY_OPTS=" \${KUBE_LOGTOSTDERR} \\

\${PROXY_LOGDIR} \\

\${KUBE_LOG_LEVEL} \\

\${NODE_HOSTNAME} \\

\${KUBE_MASTER}"

cat <<EOF >/usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=-/etc/kubernetes/kube-proxy

ExecStart=/usr/bin/kube-proxy ${KUBE_PROXY_OPTS}

Restart=on-failure

[Install]

WantedBy=multi-user.target

EOF

(2) Run the script to generate the configuration file and the systemd unit file

[root@k8s-node1 ~]# sh proxy.sh

6. Start the node services

[root@k8s-node1 ~]# swapoff -a

[root@k8s-node1 ~]# systemctl daemon-reload

[root@k8s-node1 ~]# systemctl enable kubelet.service

[root@k8s-node1 ~]# systemctl start kubelet.service

[root@k8s-node1 ~]# systemctl enable kube-proxy.service

[root@k8s-node1 ~]# systemctl start kube-proxy.service

The swapoff -a command turns off the swap partition; without it, kubelet fails to start.
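`swapoff -a` only lasts until the next reboot, so the swap entry in `/etc/fstab` is usually commented out as well. The following sketch shows that edit against a scratch file with made-up entries; the function name is hypothetical, and the real `/etc/fstab` is not touched.

```shell
#!/usr/bin/env bash
# Comment out every uncommented swap entry in an fstab-style file (GNU sed).
disable_swap_in_fstab() {
  local fstab="$1"
  # Match lines that are not already comments and contain "swap" as a whole field.
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}

# Scratch copy with sample (assumed) entries.
scratch="$(mktemp)"
cat > "$scratch" <<'EOF'
/dev/mapper/centos-root /     xfs  defaults 0 0
/dev/mapper/centos-swap swap  swap defaults 0 0
EOF

disable_swap_in_fstab "$scratch"
cat "$scratch"
```

Once the output looks right, the same function could be pointed at `/etc/fstab` itself, ideally after taking a backup copy.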

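At this point the Kubernetes (K8s) cluster is up. So far only etcd is reached over HTTPS; all other services connect over HTTP. Next we switch the other services to HTTPS as well, using CA-signed digital certificates.

IV. Securing the Kubernetes cluster

In a trusted internal network, the Kubernetes components can reach the master through the apiserver's insecure port, http://apiserver:8080. But if the apiserver has to serve external clients, or if containers in the cluster need to query the apiserver for cluster information, the safer approach is to enable HTTPS. Kubernetes offers mutual (two-way) digital certificate authentication based on CA signatures, as well as simpler authentication based on HTTP Basic or tokens; of these, CA certificates are the most secure. We now configure CA-signed certificate authentication.

1. Stop all services on the nodes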

[root@k8s-node1 ~]# systemctl stop kube-proxy.service

[root@k8s-node1 ~]# systemctl stop kubelet.service

2. Stop all services on the master host except etcd

[root@k8s-master ~]# systemctl stop kube-scheduler.service

[root@k8s-master ~]# systemctl stop kube-controller-manager.service

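[root@k8s-master ~]# systemctl stop kube-apiserver.service

3. Generate the certificates and private keys for each component

(1) Copy the CA root certificate, private key, and related files to the directory that holds the Kubernetes certificates and keys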

[root@k8s-master ~]# cd /etc/etcd/ssl/

[root@k8s-master ssl]# cp ca.pem ca-config.json ca-key.pem etcd-csr.json /etc/kubernetes/ssl/

[root@k8s-master ssl]# cd /etc/kubernetes/ssl/

(2) List the users available as cluster roles

[root@k8s-master ssl]# kubectl get clusterrole

Note: the apiserver uses the admin user, controller-manager uses system:kube-controller-manager, scheduler uses system:kube-scheduler, and kubelet and kube-proxy use system:node. The user name corresponds to the CN field in the JSON file. The user names and the components must correspond one to one; otherwise the other components cannot connect to the apiserver.
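The component-to-user mapping in the note can be written down as a small lookup, which helps when filling in the `CN` field of each CSR file. This is only a sketch: the function name is hypothetical and the mapping simply restates the note above.

```shell
#!/usr/bin/env bash
# Return the certificate CN (user name) this article assigns to a component.
component_cn() {
  case "$1" in
    kube-apiserver)          echo "admin" ;;
    kube-controller-manager) echo "system:kube-controller-manager" ;;
    kube-scheduler)          echo "system:kube-scheduler" ;;
    kubelet|kube-proxy)      echo "system:node" ;;
    *) echo "unknown component: $1" >&2; return 1 ;;
  esac
}

# Print the full mapping as a two-column list.
for c in kube-apiserver kube-controller-manager kube-scheduler kubelet kube-proxy; do
  printf '%-26s %s\n' "$c" "$(component_cn "$c")"
done
```

Looking the CN up from one function makes it harder for a CSR file and a later `kubectl config set-credentials` call to drift apart.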

(3) Edit apiserver-csr.json

[root@k8s-master ssl]# mv etcd-csr.json apiserver-csr.json

[root@k8s-master ssl]# vim apiserver-csr.json

Note: the apiserver certificate and private key are created from apiserver-csr.json, using the admin user

{

"CN": "admin",

"hosts": [

"127.0.0.1",

"192.168.2.212"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "ShangHai",

"ST": "ShangHai",

"O": "admin",

"OU": "system"

}

]

}

(4) Edit k8s-csr.json for kube-controller-manager

Note: the kube-controller-manager certificate and private key are created from k8s-csr.json, using the system:kube-controller-manager user

{

"CN": "system:kube-controller-manager",

"hosts": [

"127.0.0.1",

"192.168.2.212"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "ShangHai",

"ST": "ShangHai",

"O": "controller",

"OU": "system"

}

]

}

(5) Edit scheduler-csr.json

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"192.168.2.212"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "ShangHai",

"ST": "ShangHai",

"O": "kube-scheduler",

"OU": "system"

}

]

}

(6) Edit node-csr.json

{

"CN": "system:node",

"hosts": [

"127.0.0.1",

"192.168.2.212",

"192.168.2.213",

"192.168.2.214",

"192.168.2.215"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"L": "ShangHai",

"ST": "ShangHai",

"O": "node",

"OU": "system"

}

]

}

(7) Generate the certificate and private key files for each component

[root@k8s-master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes -hostname=127.0.0.1,192.168.2.212 apiserver-csr.json | cfssljson -bare apiserver

[root@k8s-master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes -hostname=127.0.0.1,192.168.2.212 k8s-csr.json | cfssljson -bare k8s

[root@k8s-master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes -hostname=127.0.0.1,192.168.2.212 scheduler-csr.json | cfssljson -bare scheduler

[root@k8s-master ssl]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes -hostname=127.0.0.1,192.168.2.212,192.168.2.213,192.168.2.214,192.168.2.215 node-csr.json | cfssljson -bare node

4. Copy the certificate and private key files to the three nodes

(1) Create the certificate directory on each node

[root@k8s-node1 ~]# mkdir -p /etc/kubernetes/ssl

(2) Copy the files to the node

[root@k8s-master ssl]# scp ca.pem node.pem node-key.pem root@192.168.2.213:/etc/kubernetes/ssl/

Note: for the other two nodes, replace the IP in the command.
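Rather than editing the IP by hand for each node, the copy can be driven by a loop over the node addresses from the environment table. The sketch below uses a hypothetical helper and only prints the `scp` commands, so they can be reviewed before being run.

```shell
#!/usr/bin/env bash
# Node IPs from the system environment table at the top of this article.
NODE_IPS=(192.168.2.213 192.168.2.214 192.168.2.215)

# Print one scp command per node IP passed as an argument.
print_scp_cmds() {
  local ip
  for ip in "$@"; do
    echo "scp ca.pem node.pem node-key.pem root@${ip}:/etc/kubernetes/ssl/"
  done
}

print_scp_cmds "${NODE_IPS[@]}"
```

Piping the output to `sh` from within /etc/kubernetes/ssl on the master would perform the copies, assuming the target directory already exists on every node.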

5. Configure the kube-apiserver service

(1) Edit the kube-apiserver configuration file, /etc/kubernetes/kube-apiserver

KUBE_LOGTOSTDERR="--logtostderr=false"

APISERVER_LOGDIR="--log-dir=/data/apiserver/log"

KUBE_LOG_LEVEL="--v=2"

KUBE_ETCD_SERVERS="--etcd-servers=https://127.0.0.1:2379"

KUBE_ETCD_CAFILE="--etcd-cafile=/etc/etcd/ssl/ca.pem"

KUBE_ETCD_CERTFILE="--etcd-certfile=/etc/etcd/ssl/etcd.pem"

KUBE_ETCD_KEYFILE="--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem"

KUBE_API_ADDRESS="--bind-address=0.0.0.0"

KUBE_API_PORT="--secure-port=6443"

KUBE_ADVERTISE_ADDR="--advertise-address=192.168.2.212"

KUBE_ALLOW_PRIV="--allow-privileged=true"

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.10.10.0/24"

KUBE_MODE_CONTROL="--authorization-mode=Node,RBAC"

KUBE_ADMISSION_CONTROL="--enable-admission-plugins=NodeRestriction"

KUBE_API_CLIENT_CA_FILE="--client-ca-file=/etc/kubernetes/ssl/ca.pem"

KUBE_API_TLS_CERT_FILE="--tls-cert-file=/etc/kubernetes/ssl/apiserver.pem"

KUBE_API_TLS_PRIVATE_KEY_FILE="--tls-private-key-file=/etc/kubernetes/ssl/apiserver-key.pem"

(2) Edit the systemd unit file, /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-apiserver

ExecStart=/usr/bin/kube-apiserver ${KUBE_LOGTOSTDERR} \

${APISERVER_LOGDIR} \

${KUBE_LOG_LEVEL} \

${KUBE_ETCD_SERVERS} \

${KUBE_ETCD_CAFILE} \

${KUBE_ETCD_CERTFILE} \

${KUBE_ETCD_KEYFILE} \

${KUBE_API_ADDRESS} \

${KUBE_API_PORT} \

${KUBE_ADVERTISE_ADDR} \

${KUBE_ALLOW_PRIV} \

${KUBE_SERVICE_ADDRESSES} \

${KUBE_MODE_CONTROL} \

${KUBE_ADMISSION_CONTROL} \

${KUBE_API_CLIENT_CA_FILE} \

${KUBE_API_TLS_CERT_FILE} \

${KUBE_API_TLS_PRIVATE_KEY_FILE}

Restart=on-failure

[Install]

WantedBy=multi-user.target

(3) Start the kube-apiserver service

[root@k8s-master ssl]# systemctl daemon-reload

[root@k8s-master ssl]# systemctl start kube-apiserver.service

6. Configure the kube-controller-manager service

(1) Create the kubeconfig file, /etc/kubernetes/kubeconfig

[root@k8s-master kubernetes]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --kubeconfig=kubeconfig

[root@k8s-master kubernetes]# kubectl config set-credentials system:kube-controller-manager --client-certificate=/etc/kubernetes/ssl/k8s.pem --client-key=/etc/kubernetes/ssl/k8s-key.pem --embed-certs=true --kubeconfig=kubeconfig

[root@k8s-master kubernetes]# kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=kubeconfig

[root@k8s-master kubernetes]# kubectl config use-context system:kube-controller-manager --kubeconfig=kubeconfig

(2) Edit the kube-controller-manager configuration file, /etc/kubernetes/kube-controller-manager

KUBE_LOGTOSTDERR="--logtostderr=false"

CON_LOGDIR="--log-dir=/data/controller-manager/log"

KUBE_LOG_LEVEL="--v=2"

KUBE_MASTER="--master=https://192.168.2.212:6443"

KUBE_CONTROLLER_MANAGER_ROOT_CA_FILE="--root-ca-file=/etc/kubernetes/ssl/ca.pem"

KUBE_CONTROLLER_MANAGER_SERVICE_ACCOUNT_PRIVATE_KEY_FILE="--service-account-private-key-file=/etc/kubernetes/ssl/k8s-key.pem"

KUBE_CONFIG_FILE="--kubeconfig=/etc/kubernetes/kubeconfig"

KUBE_LEADER_ELECT="--leader-elect"

(3) Edit the systemd unit file, /usr/lib/systemd/system/kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-controller-manager

ExecStart=/usr/bin/kube-controller-manager ${KUBE_LOGTOSTDERR} \

${CON_LOGDIR} \

${KUBE_LOG_LEVEL} \

${KUBE_MASTER} \

${KUBE_CONTROLLER_MANAGER_ROOT_CA_FILE} \

${KUBE_CONTROLLER_MANAGER_SERVICE_ACCOUNT_PRIVATE_KEY_FILE}\

${KUBE_CONFIG_FILE} \

${KUBE_LEADER_ELECT}

Restart=on-failure

[Install]

WantedBy=multi-user.target

(4) Start the kube-controller-manager service

[root@k8s-master kubernetes]# systemctl daemon-reload

[root@k8s-master kubernetes]# systemctl start kube-controller-manager.service

(5) Create the role bindings

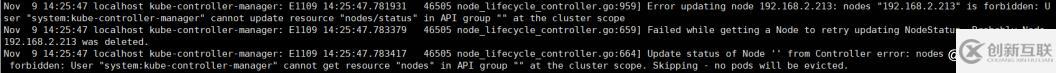

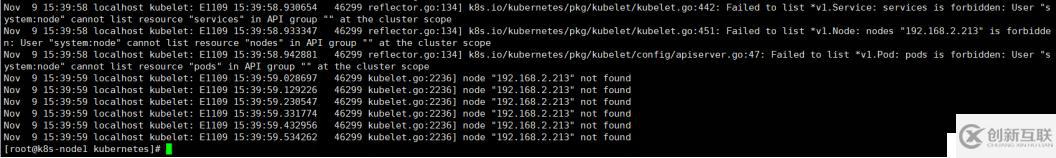

After the kube-controller-manager service starts, its log reports the following error

The error indicates an RBAC authorization failure: node information is maintained by the users under system:controller, and the system:kube-controller-manager user lacks the permission. Binding system:kube-controller-manager to the system:controller:node-controller cluster role resolves it.

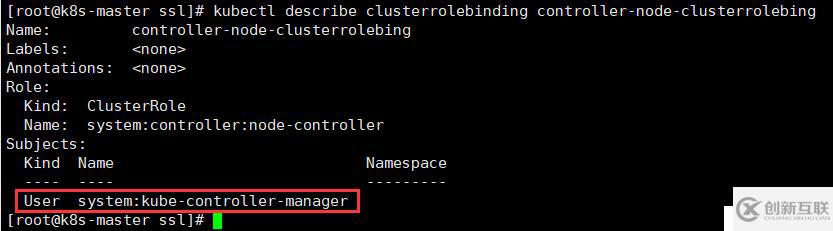

[root@k8s-master ssl]# kubectl create clusterrolebinding controller-node-clusterrolebing --clusterrole=system:controller:node-controller --user=system:kube-controller-manager

View the binding

[root@k8s-master ssl]# kubectl describe clusterrolebinding controller-node-clusterrolebing

The log no longer shows the error above, but cluster-level errors remain.

Bind system:kube-controller-manager to the cluster-admin cluster role

[root@k8s-master ssl]# kubectl create clusterrolebinding controller-cluster-clusterrolebing --clusterrole=cluster-admin --user=system:kube-controller-manager

7. Configure the kube-scheduler service

(1) Create the kubeconfig file, /etc/kubernetes/scheconfig

[root@k8s-master kubernetes]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --kubeconfig=scheconfig

[root@k8s-master kubernetes]# kubectl config set-credentials system:kube-scheduler --client-certificate=/etc/kubernetes/ssl/scheduler.pem --client-key=/etc/kubernetes/ssl/scheduler-key.pem --embed-certs=true --kubeconfig=scheconfig

[root@k8s-master kubernetes]# kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=scheconfig

[root@k8s-master kubernetes]# kubectl config use-context system:kube-scheduler --kubeconfig=scheconfig

(2) Edit the kube-scheduler configuration file, /etc/kubernetes/kube-scheduler

KUBE_LOGTOSTDERR="--logtostderr=false"

KUBE_LOGDIR="--log-dir=/data/scheduler/log"

KUBE_LOG_LEVEL="--v=2"

KUBE_MASTER="--master=https://192.168.2.212:6443"

SCHEDULER_CONFIG_FILE="--kubeconfig=/etc/kubernetes/scheconfig"

KUBE_LEADER_ELECT="--leader-elect"

KUBE_SCHEDULER_ARGS=""

(3) Edit the systemd unit file, /usr/lib/systemd/system/kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-scheduler

ExecStart=/usr/bin/kube-scheduler ${KUBE_LOGTOSTDERR} \

${KUBE_LOGDIR} \

${KUBE_LOG_LEVEL} \

${KUBE_MASTER} \

${SCHEDULER_CONFIG_FILE} \

${KUBE_LEADER_ELECT} \

$KUBE_SCHEDULER_ARGS

Restart=on-failure

[Install]

WantedBy=multi-user.target

(4) Start the kube-scheduler service

[root@k8s-master kubernetes]# systemctl daemon-reload

[root@k8s-master kubernetes]# systemctl start kube-scheduler

8. Configure the kubelet service

(1) Create the kubeconfig file for kubelet and kube-proxy, /etc/kubernetes/nodeconfig

[root@k8s-node1 kubernetes]# kubectl config set-cluster kubernetes --certificate-authority=/etc/kubernetes/ssl/ca.pem --embed-certs=true --server=https://192.168.2.212:6443 --kubeconfig=nodeconfig

[root@k8s-node1 kubernetes]# kubectl config set-credentials system:node --client-certificate=/etc/kubernetes/ssl/node.pem --client-key=/etc/kubernetes/ssl/node-key.pem --embed-certs=true --kubeconfig=nodeconfig

[root@k8s-node1 kubernetes]# kubectl config set-context system:node --cluster=kubernetes --user=system:node --kubeconfig=nodeconfig

[root@k8s-node1 kubernetes]# kubectl config use-context system:node --kubeconfig=nodeconfig

(2) Edit the kubelet configuration file, /etc/kubernetes/kubelet

KUBE_LOGTOSTDERR="--logtostderr=false"

KUBELET_LOGDIR="--log-dir=/data/kubelet/log"

KUBE_LOG_LEVEL="--v=2"

NODE_HOSTNAME="--hostname-override=192.168.2.213"

KUBELET_KUBECONFIG="--kubeconfig=/etc/kubernetes/nodeconfig"

(3) Start the kubelet service

[root@k8s-node1 kubernetes]# systemctl start kubelet.service

(4) Create the role bindings

After kubelet starts, its log reports an error

Bind the system:node user to the same two cluster roles as well

[root@k8s-master ssl]# kubectl create clusterrolebinding node-node-clusterrolebing --clusterrole=system:controller:node-controller --user=system:node

[root@k8s-master ssl]# kubectl create clusterrolebinding node-cluster-clusterrolebing --clusterrole=cluster-admin --user=system:node

9. Configure the kube-proxy service

(1) Edit the kube-proxy configuration file, /etc/kubernetes/kube-proxy

KUBE_LOGTOSTDERR="--logtostderr=false"

PROXY_LOGDIR="--log-dir=/data/proxy/log"

KUBE_LOG_LEVEL="--v=2"

NODE_HOSTNAME="--hostname-override=192.168.2.213"

KUBE_MASTER="--master=https://192.168.2.212:6443"

PROXY_KUBECONFIG="--kubeconfig=/etc/kubernetes/nodeconfig"

(2) Edit the systemd unit file, /usr/lib/systemd/system/kube-proxy.service

[Unit]

Description=Kubernetes Proxy

After=network.target

[Service]

EnvironmentFile=-/etc/kubernetes/kube-proxy

ExecStart=/usr/bin/kube-proxy ${KUBE_LOGTOSTDERR} \

${PROXY_LOGDIR} \

${KUBE_LOG_LEVEL} \

${NODE_HOSTNAME} \

${KUBE_MASTER} \

${PROXY_KUBECONFIG}

Restart=on-failure

[Install]

WantedBy=multi-user.target

(3) Start the kube-proxy service

[root@k8s-node1 kubernetes]# systemctl daemon-reload

[root@k8s-node1 kubernetes]# systemctl start kube-proxy.service

If the following error is reported, flush the iptables NAT rules first

[root@k8s-node1 kubernetes]# iptables -F -t nat

[root@k8s-node1 kubernetes]# iptables -X -t nat

[root@k8s-node1 kubernetes]# iptables -Z -t nat

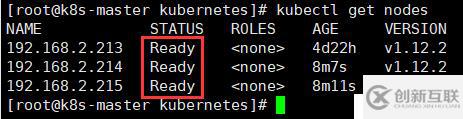

[root@k8s-node1 kubernetes]# systemctl restart kube-proxy.service

(4) Check the node status

[root@k8s-master kubernetes]# kubectl get nodes

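This completes the deployment of the Kubernetes cluster, with all components communicating over HTTPS. As the configuration above shows, every component needs to connect to the apiserver, and data is stored in etcd through the apiserver, so the apiserver is the central component of the whole cluster.

V. Testing

Here we create an nginx Deployment as a test

1. Pull the pause image on the node

k8s.gcr.io/pause:3.1 is not reachable from mainland China, so the pause image is pulled from Docker Hub instead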

[root@k8s-node1 log]# docker pull docker.io/kubernetes/pause

[root@k8s-node1 log]# docker tag kubernetes/pause:latest k8s.gcr.io/pause:3.1

2. Create the Deployment definition file for nginx

The nginx.yaml file reads as follows

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: myweb
spec:
  replicas: 1
  selector:
    matchLabels:
      app: myweb
  strategy:
    type: RollingUpdate
  template:
    metadata:
      labels:
        app: myweb
    spec:
      containers:
      - name: myweb
        image: nginx
        ports:
        - containerPort: 80

3. Create the Deployment, ReplicaSet, Pod, and container

[root@k8s-master ~]# kubectl create -f nginx.yaml

4. Check the Deployment

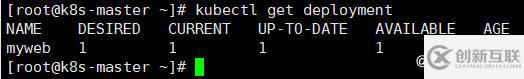

[root@k8s-master ~]# kubectl get deployment

5. Check the ReplicaSet (RS)

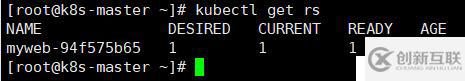

[root@k8s-master ~]# kubectl get rs

6. Check the Pod

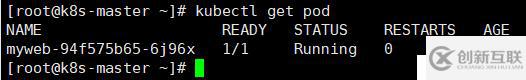

[root@k8s-master ~]# kubectl get pod

7. Check the containers (on the node)

[root@k8s-node1 log]# docker ps -a

8. Create the Service definition file for nginx

The myweb-svc.yaml file reads as follows

apiVersion: v1
kind: Service
metadata:
  name: myweb
spec:
  type: NodePort
  ports:
  - port: 80
    nodePort: 30001
  selector:
    app: myweb

9. Create the Service

[root@k8s-master ~]# kubectl create -f myweb-svc.yaml

10. Check the Service

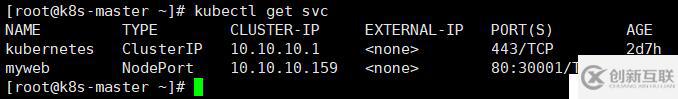

[root@k8s-master ~]# kubectl get svc

11. Access from a browser

Access the service through port 30001 on a node: http://192.168.2.213:30001
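A NodePort Service is exposed on the same port of every node that runs kube-proxy, so the other nodes should answer on port 30001 too, assuming they were configured the same way as k8s-node1. The sketch below only prints the candidate URLs; the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# nodePort value from myweb-svc.yaml.
NODE_PORT=30001

# Print the NodePort URL for each node IP passed as an argument.
nodeport_urls() {
  local ip
  for ip in "$@"; do
    echo "http://${ip}:${NODE_PORT}"
  done
}

nodeport_urls 192.168.2.213 192.168.2.214 192.168.2.215
```

Each printed URL can then be opened in a browser or fetched with a command-line HTTP client to confirm that nginx responds.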
